Sampling Distribution


Sampling Distribution

Simulating Unicorns

Central Limit Theorem

Common Sampling Distributions

Sampling Distributions for Regression Models

Scientific Notation

Sampling Distribution

A sampling distribution captures the idea that the statistics you compute (such as slopes and intercepts) have their own data generating process.

In other words, the numerical values you obtain from the lm and glm functions would be different if we had collected a different data set.

Some values are more likely than others. Because of this, the statistics have their own data generating process, just as the outcome of interest has its own data generating process.

Sampling Distributions

Distribution of a statistic over repeated samples

Different samples yield different statistics

Standard Error

The standard error (SE) is the standard deviation of a statistic's sampling distribution.

The SE tells us how much a statistic varies from sample to sample. A smaller SE means a more precise estimate.
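The SE can be approximated by simulation: draw many samples, compute the statistic in each, and take the standard deviation of those values. The sketch below does this for the sample mean; the population N(50, 10) and the sample size of 25 are assumptions chosen only for illustration.

```r
# Minimal sketch: approximate the standard error of the sample mean by simulation.
# Assumed setup (not from the lecture): population N(mean = 50, sd = 10), n = 25.
set.seed(1)
n <- 25
sigma <- 10

# Draw 5000 samples and record the mean of each one
sample_means <- replicate(5000, mean(rnorm(n, mean = 50, sd = sigma)))

# The SE is the standard deviation of the statistic across samples
se_simulated <- sd(sample_means)
se_theory <- sigma / sqrt(n)  # theoretical SE of the mean, sigma / sqrt(n)

c(simulated = se_simulated, theory = se_theory)
```

The two values should agree closely; increasing `n` shrinks both.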

Modelling the Data

\[ Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i \]

- \(Y_i\): Outcome data

- \(X_i\): Predictor data

- \(\beta_0, \beta_1\): parameters (intercept and slope)

- \(\varepsilon_i\): error term

Error Term

\[ \varepsilon_i \sim DGP \]

Randomness Effect

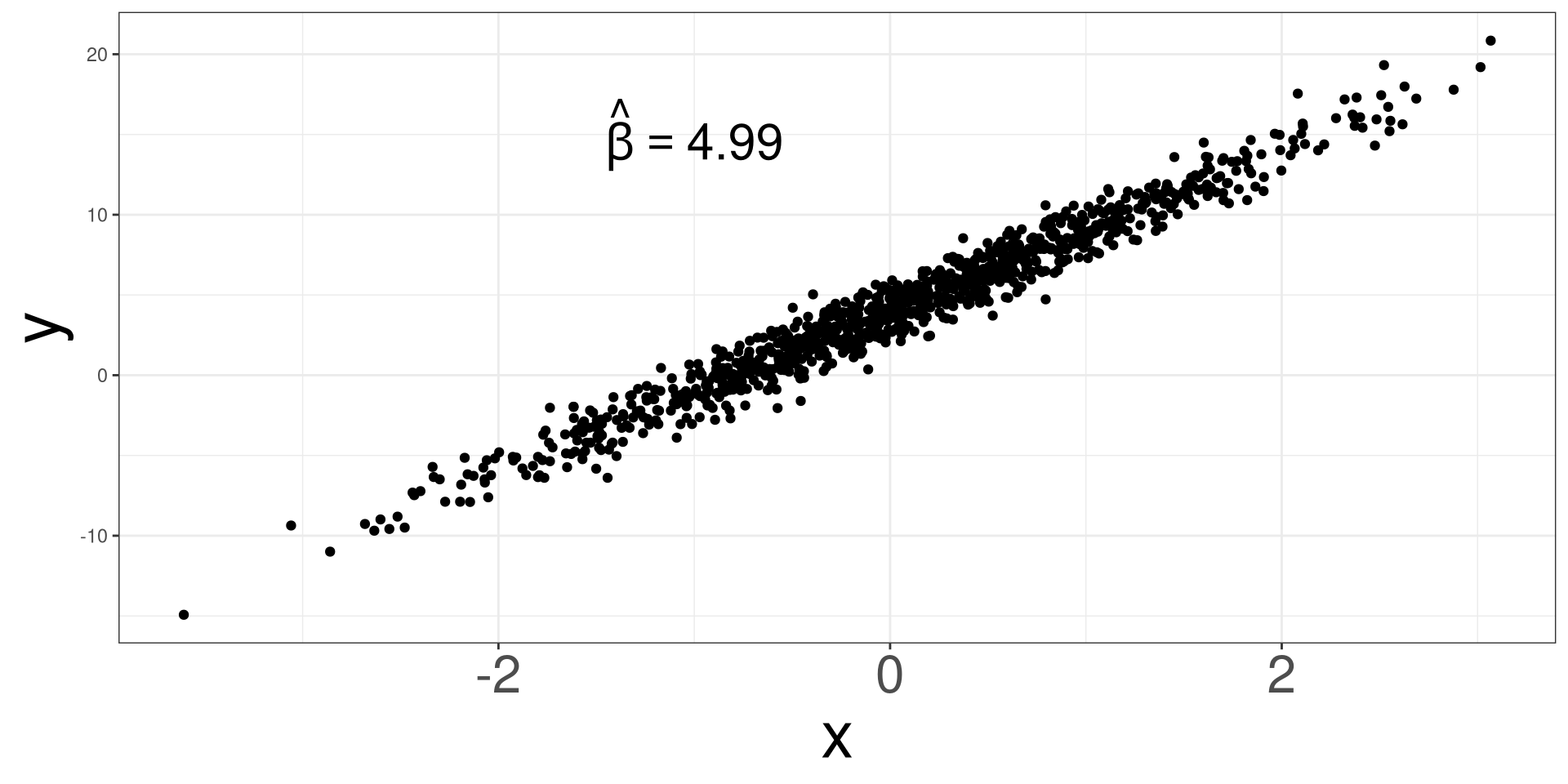

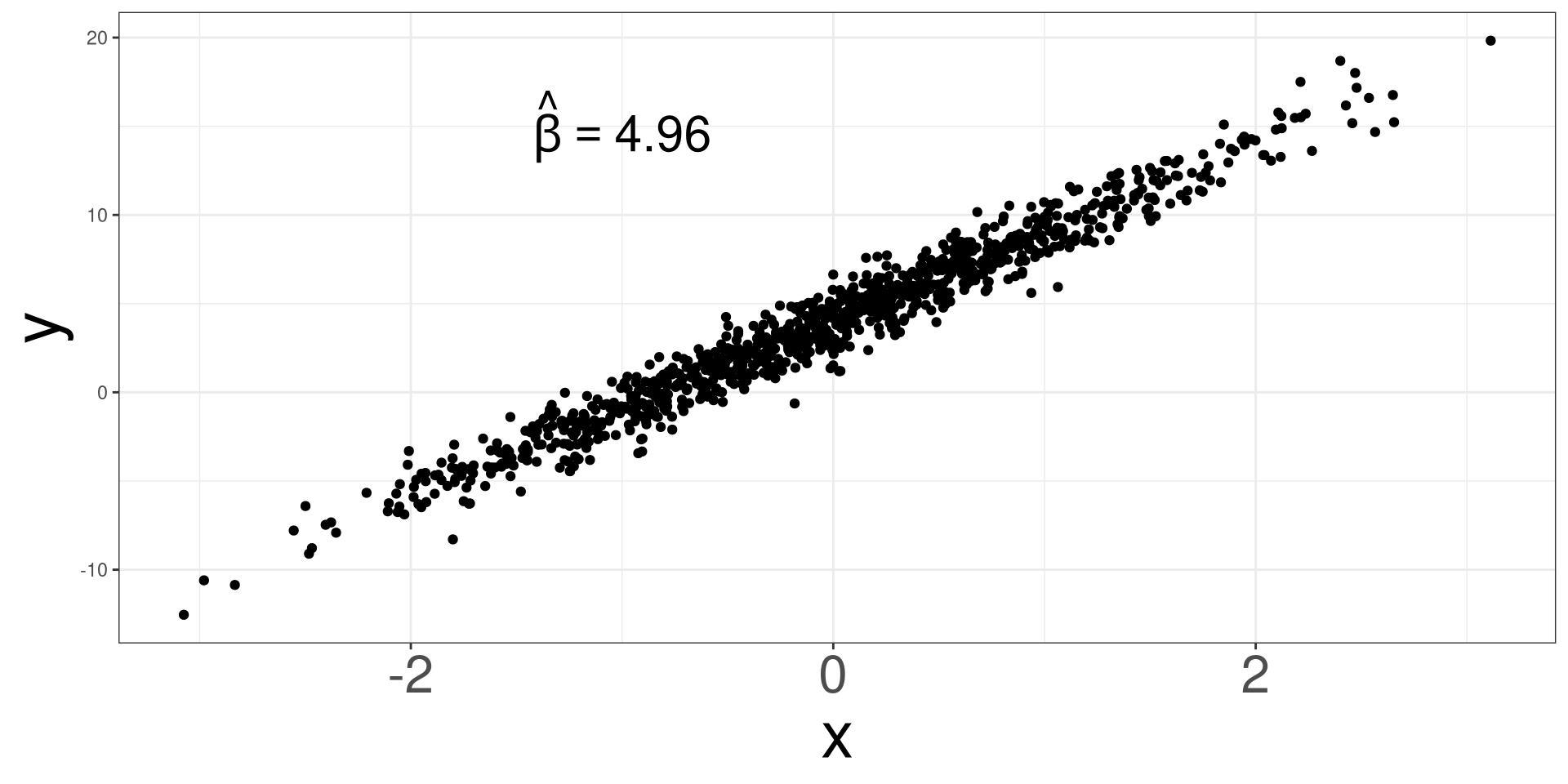

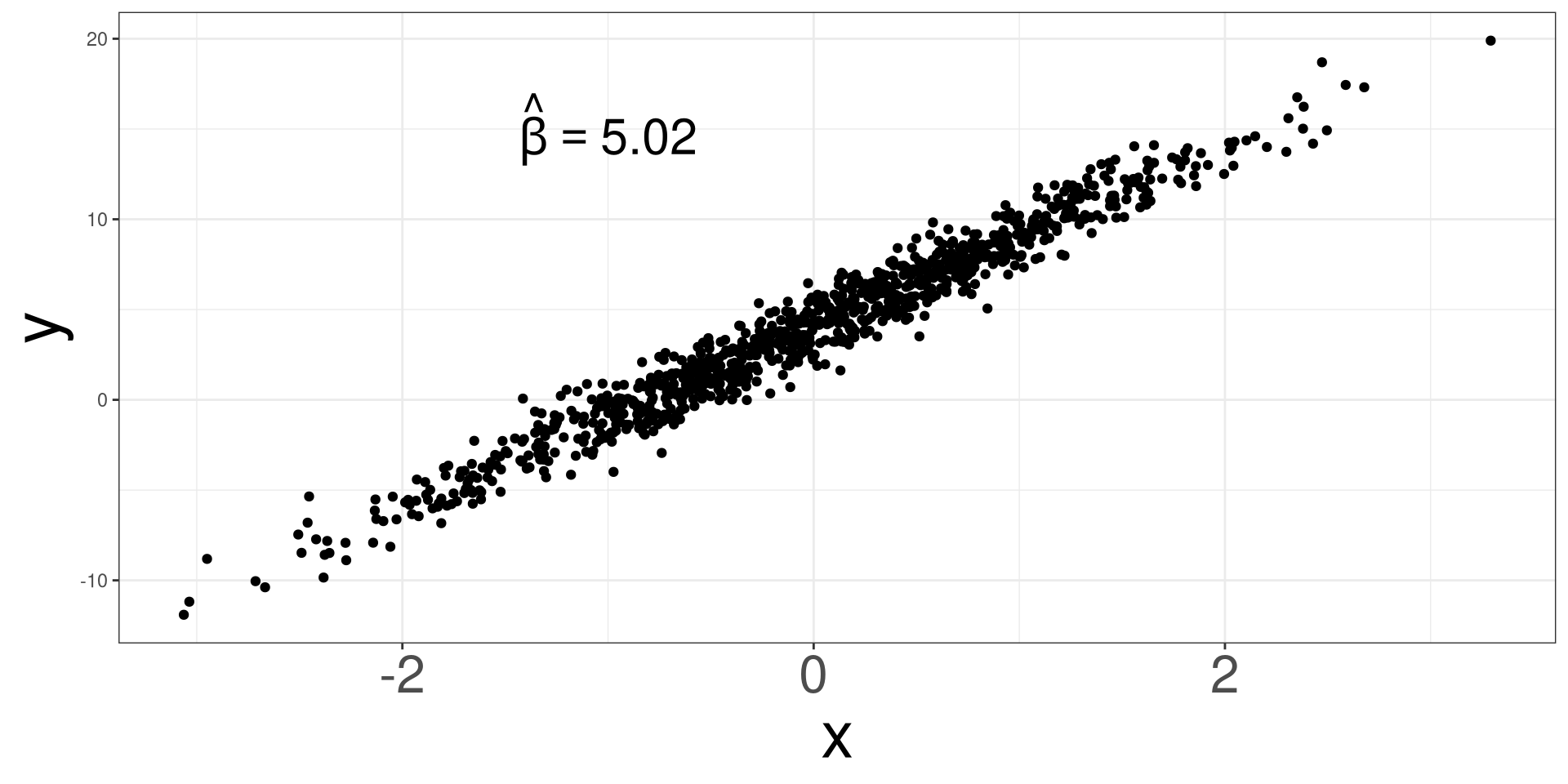

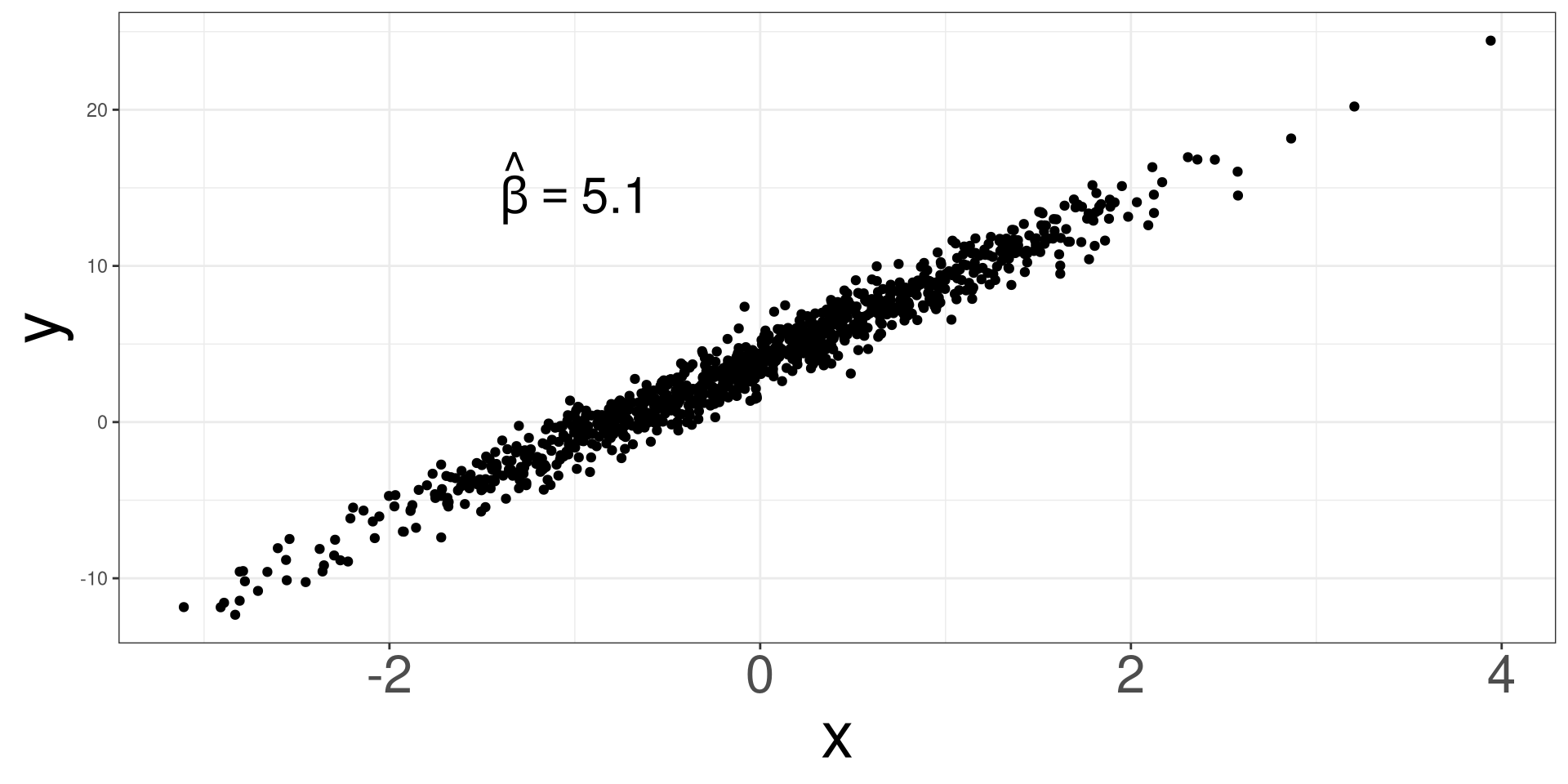

The randomness effect is a sampling phenomenon: every time you sample from a population, you get a different sample.

Different samples produce different statistics.

These statistics therefore have a distribution of their own.

Randomness Effect 1

Randomness Effect 2

Randomness Effect 3

Randomness Effect 4

Randomness Effect 5

Simulating Unicorns


To better understand how statistics vary, let's simulate a data set of unicorn characteristics and visualize the variation.

We will simulate a data set using the unicorns function; the only thing we need to specify is how many unicorns to simulate.

Simulating Unicorn Data

#> Unicorn_ID Age Gender Color Type_of_Unicorn Type_of_Horn Horn_Length

#> 1 1 16 Female Black Jewel Aquamarine 4.776032

#> 2 2 10 Male Gray Jewel Aquamarine 4.902155

#> 3 3 19 Genderfluid Black Jewel Aquamarine 4.955401

#> 4 4 8 Genderfluid Brown Rainbow Opal 5.399394

#> 5 5 16 Female Silver Ruvas Opal 4.863914

#> 6 6 16 Male Gray Ember Aquamarine 4.986831

#> 7 7 3 Non-binary Gray Rainbow Aquamarine 5.242034

#> 8 8 19 Non-binary Black Ruvas Opal 4.863053

#> 9 9 19 Agender Silver Jewel Opal 5.060944

#> 10 10 11 Genderfluid Silver Ruvas Opal 4.800689

#> Horn_Strength Weight Health_Score Personality_Score Magical_Score

#> 1 30.44000 133.87608 5 4.98691924 11167.44

#> 2 28.77776 161.11930 8 0.08855563 10962.74

#> 3 27.47913 103.45752 4 1.47732785 11235.13

#> 4 28.59666 127.14958 4 0.98056570 10913.71

#> 5 26.96410 64.40391 5 0.62557179 11175.92

#> 6 26.74551 133.13423 3 1.45529612 11121.65

#> 7 26.11884 153.23826 8 0.12214552 10753.48

#> 8 30.55474 233.38426 9 0.37342511 11240.85

#> 9 30.98367 129.75512 10 1.85586155 11251.30

#> 10 26.75460 119.30745 1 1.71927208 11023.71

#> Elusiveness_Score Gentleness_Score Nature_Score

#> 1 30.12572 48.41593 967.7119

#> 2 27.37831 19.39574 942.4597

#> 3 39.24923 10.64968 976.4082

#> 4 33.83320 32.86003 936.4327

#> 5 35.21064 88.25330 969.2473

#> 6 41.10721 43.18547 961.9797

#> 7 37.07009 -14.55553 916.2825

#> 8 37.27276 17.55056 977.2059

#> 9 39.40224 -30.59686 978.5172

#> 10 38.09775 -3.68161 950.1891

Unicorn Data Variables

#> [1] "Unicorn_ID" "Age" "Gender"

#> [4] "Color" "Type_of_Unicorn" "Type_of_Horn"

#> [7] "Horn_Length" "Horn_Strength" "Weight"

#> [10] "Health_Score" "Personality_Score" "Magical_Score"

#> [13] "Elusiveness_Score" "Gentleness_Score" "Nature_Score"

We will only look at Magical_Score and Nature_Score.

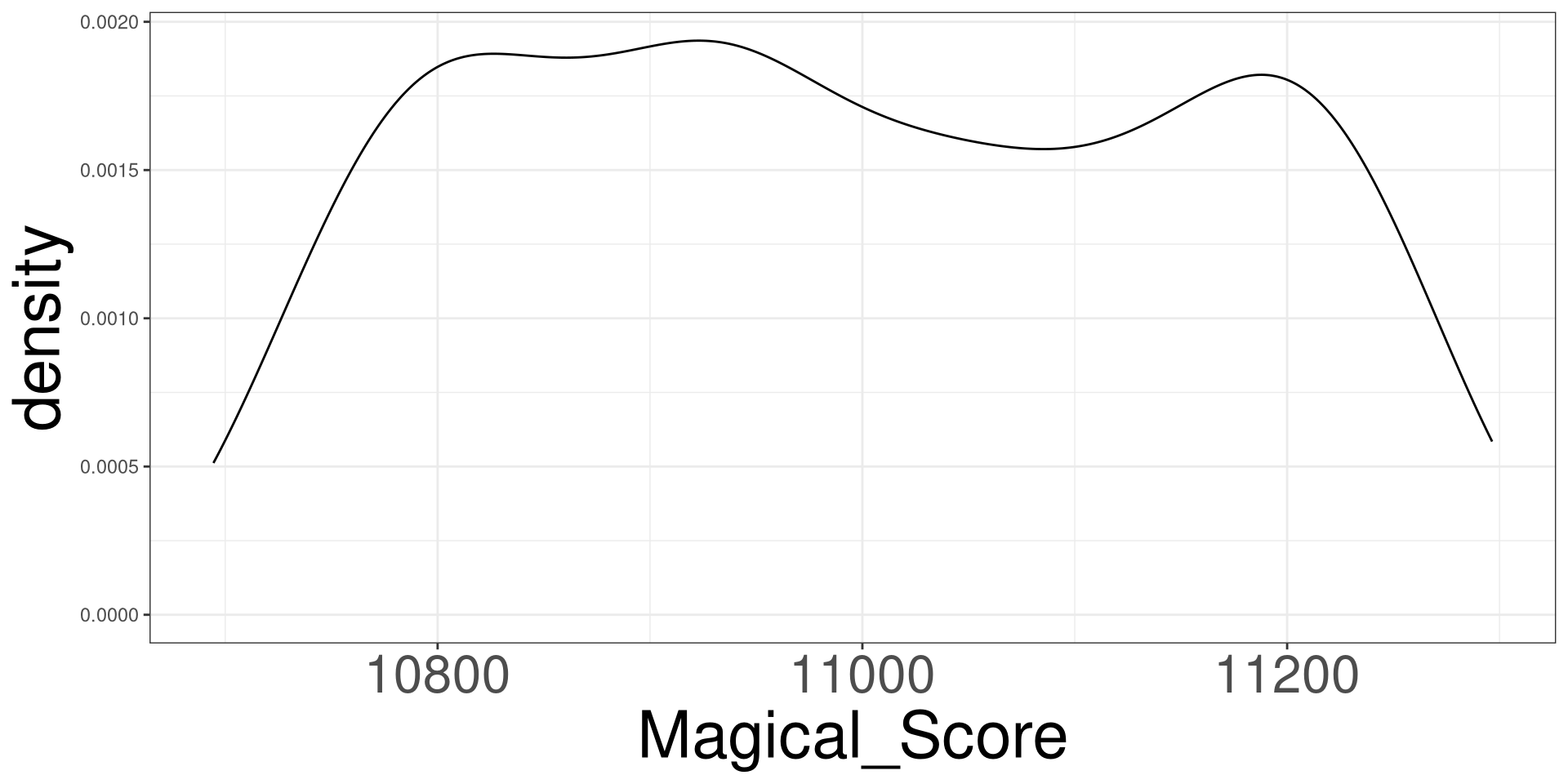

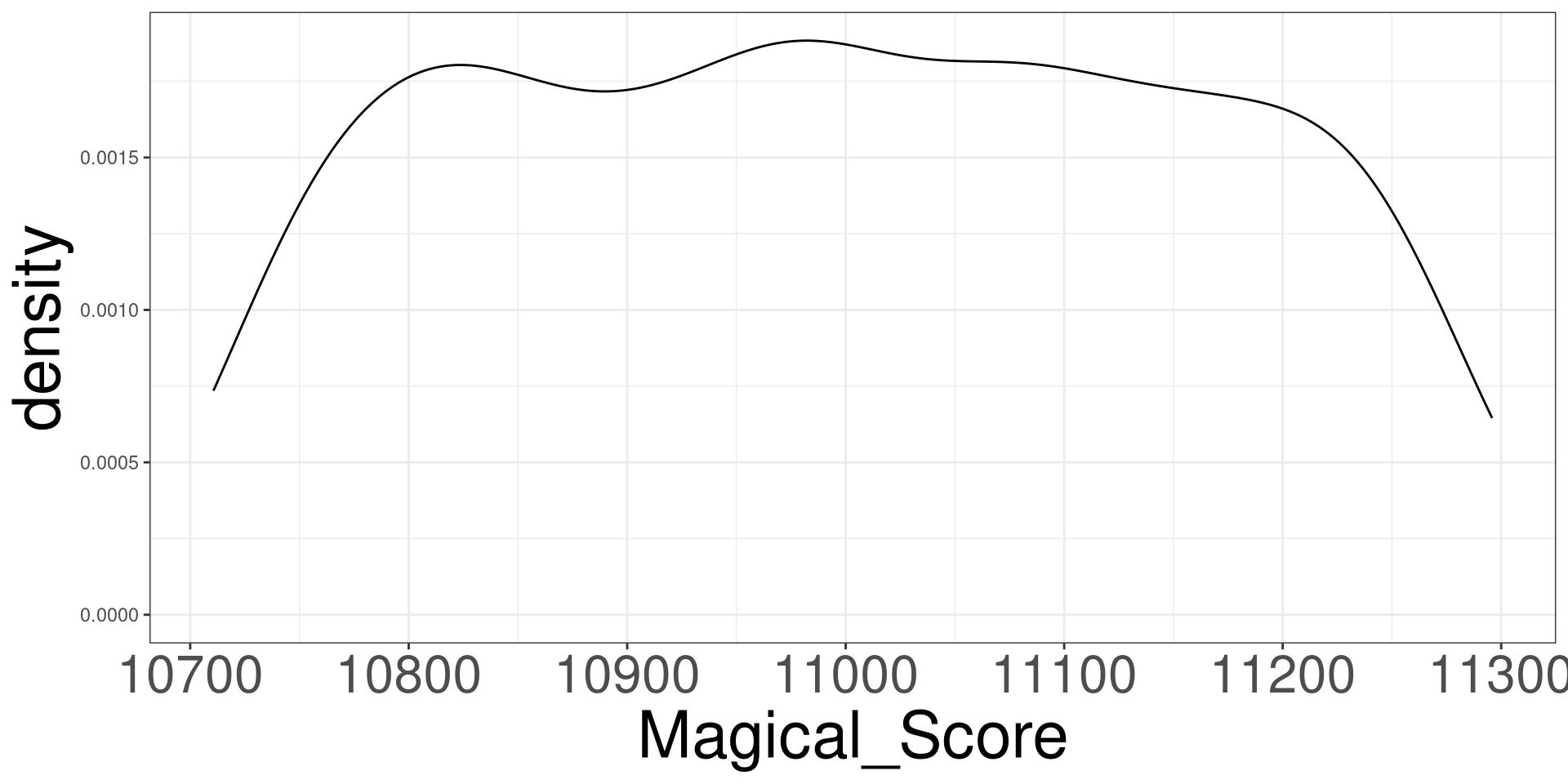

Magical and Nature Score

\[ Magical = 3423 + 8 \times Nature + \varepsilon \]

\[ \varepsilon \sim N(0, 3.24) \]
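The DGP above can be mimicked directly in R without the unicorns function. This sketch assumes, hypothetically, that Nature_Score is N(950, 20) and that 1000 unicorns are sampled; 3.24 is treated as the standard deviation of the error, matching the \(N(\mu, \sigma)\) convention used later in these notes.

```r
# Minimal sketch of the unicorn DGP, assuming (hypothetically) that
# Nature_Score ~ N(950, 20) and a sample of 1000 unicorns.
set.seed(2)
n <- 1000
nature <- rnorm(n, mean = 950, sd = 20)

# Error term: epsilon ~ N(0, 3.24), with 3.24 taken as the standard deviation
epsilon <- rnorm(n, mean = 0, sd = 3.24)

# Outcome generated from Magical = 3423 + 8 * Nature + epsilon
magical <- 3423 + 8 * nature + epsilon
unicorn_sample <- data.frame(Nature_Score = nature, Magical_Score = magical)

# Estimate beta_0 and beta_1 from this one sample
fit <- lm(Magical_Score ~ Nature_Score, data = unicorn_sample)
coef(fit)
```

Rerunning the code with a different seed gives a slightly different slope, which is exactly the randomness effect.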

Simulating \(N(0, 3.24)\)

Collecting

#> Nature_Score Magical_Score

#> 1 942.9755 10968.18

#> 2 968.7400 11170.79

#> 3 939.5305 10938.30

#> 4 929.0939 10857.29

#> 5 950.3639 11028.74

#> 6 948.9817 11014.53

#> 7 913.7070 10735.23

#> 8 961.3650 11111.40

#> 9 971.7121 11198.61

#> 10 927.7923 10847.21

DGP of Magical Score 1

DGP of Magical Score 2

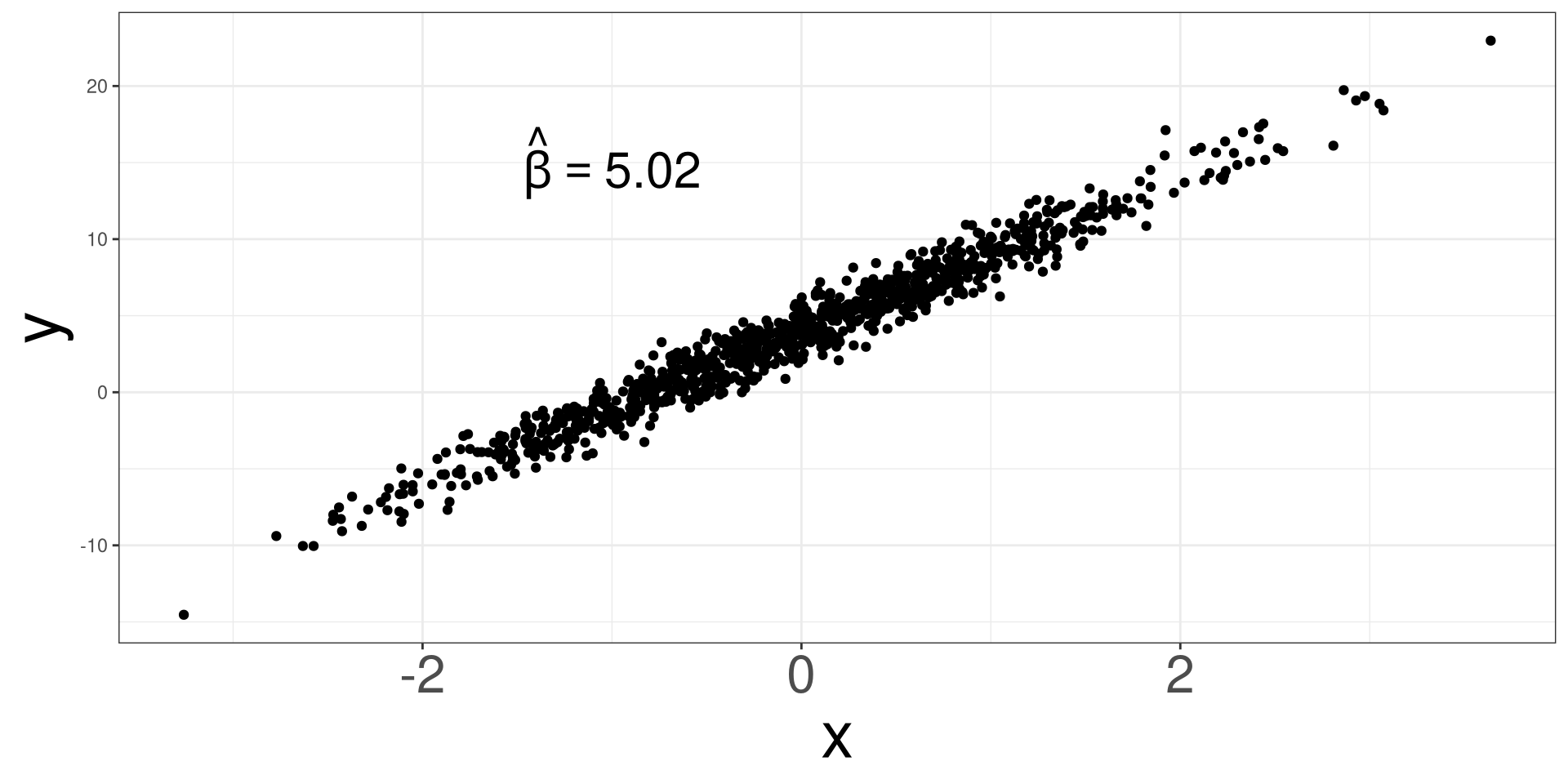

Estimating \(\beta_1\) via lm

Collecting a new sample


Replicating Processes

- N: number of times to repeat a process
- CODE: the code to be repeated
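Base R's `replicate(n, expr)` follows this pattern. The sketch below repeats "collect a new sample, fit `lm`, keep the slope" 1000 times; the Nature_Score distribution N(950, 20) and the sample size of 1000 are hypothetical assumptions, and `sample_slope()` is an illustrative helper, not a course function.

```r
# Sketch: repeat "collect a new sample, fit lm, keep the slope" 1000 times.
# Hypothetical assumptions: Nature_Score ~ N(950, 20), n = 1000.
set.seed(3)

sample_slope <- function(n = 1000) {
  nature <- rnorm(n, mean = 950, sd = 20)
  magical <- 3423 + 8 * nature + rnorm(n, mean = 0, sd = 3.24)
  fit <- lm(magical ~ nature)
  coef(fit)[["nature"]]   # beta_1 hat for this sample
}

# replicate(N, CODE): N = number of repetitions, CODE = expression to repeat
slopes <- replicate(1000, sample_slope())

mean(slopes)  # close to the true beta_1 = 8
sd(slopes)    # simulated standard error of beta_1 hat
```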

Extracting \(\hat \beta\) Coefficients

- MODEL: a fitted model from which components can be extracted
- INDEX: which coefficient to extract
  - 0: intercept
  - 1: first slope
  - 2: second slope
  - ...
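The 0-based INDEX convention maps onto base R's `coef()`, whose result is 1-indexed with the intercept first. The helper `extract_beta()` below is a hypothetical name used only to illustrate that mapping; it is not the function used in the lecture, and the built-in `mtcars` data stand in for any model with two predictors.

```r
# Hypothetical helper mirroring the MODEL/INDEX description above:
# INDEX 0 = intercept, 1 = first slope, 2 = second slope, ...
# (coef() is base R; extract_beta() is an illustrative name, not a course function.)
extract_beta <- function(model, index) {
  unname(coef(model)[index + 1])  # R vectors are 1-indexed, so shift by one
}

# Small illustrative model with two predictors on the built-in mtcars data
fit <- lm(mpg ~ wt + hp, data = mtcars)
extract_beta(fit, 0)  # intercept
extract_beta(fit, 1)  # slope for wt
extract_beta(fit, 2)  # slope for hp
```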

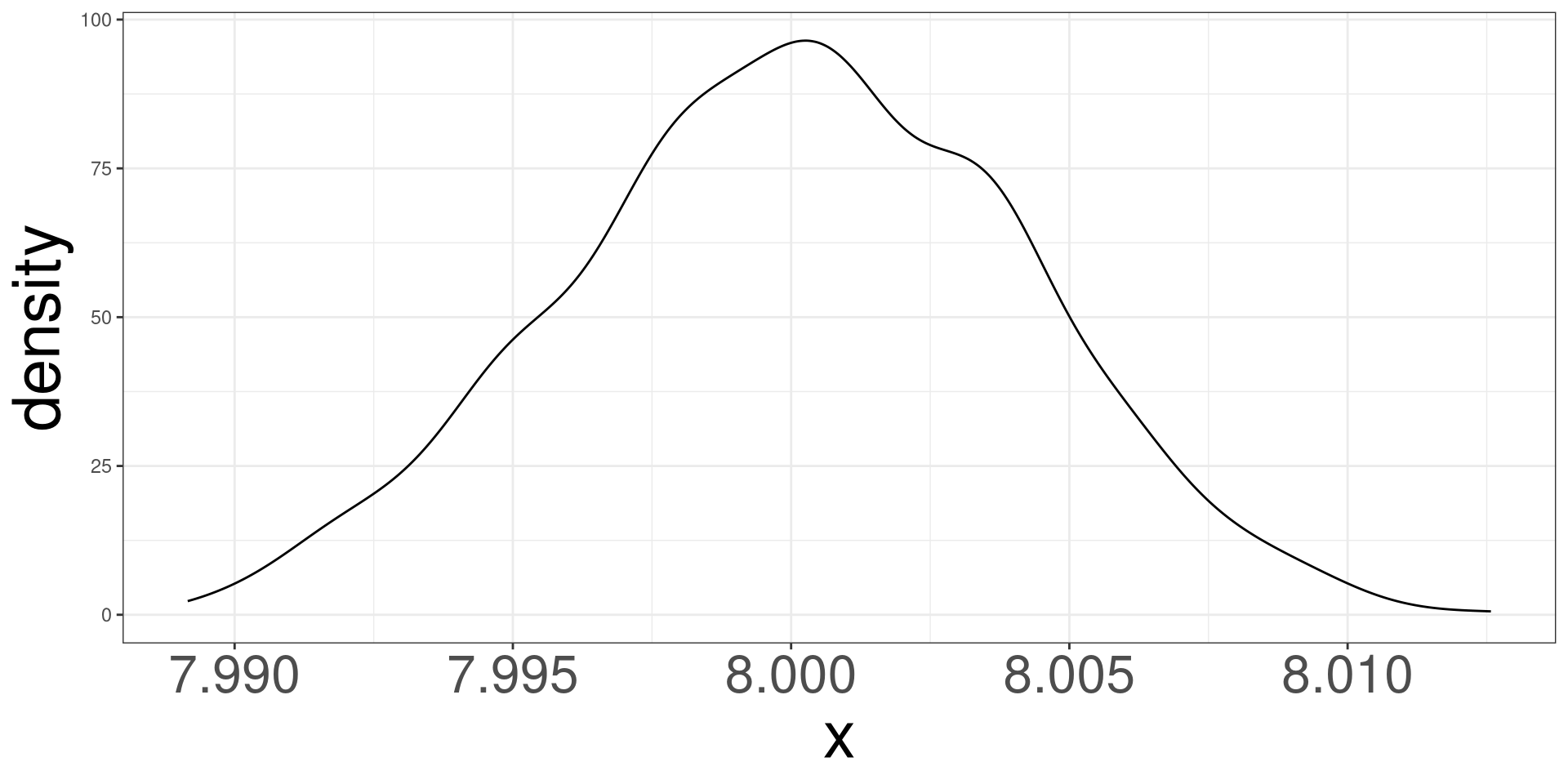

Collecting 1000 Samples

#> [1] 8.003249 8.006213 7.997957 7.997386 8.002820 8.006290 7.999789 8.007366

#> [9] 7.999132 7.997001 8.005017 8.005557 7.994820 7.996933 8.003658 7.997881

#> [17] 7.997294 8.002042 7.999663 8.000680 8.002013 8.004814 8.003004 7.998409

#> [25] 8.005042 7.998367 8.000374 8.000422 7.994410 7.997193 7.999950 8.006605

#> [33] 7.996852 7.999575 7.999634 7.995535 8.004872 8.001702 8.008297 8.005318

#> [41] 7.999827 7.999123 8.002595 8.001901 8.002505 8.010175 7.999175 8.000372

#> [49] 8.005617 7.996935 8.008285 8.006177 8.005751 7.998273 8.006264 8.000798

#> [57] 8.006827 7.995251 7.995436 8.004149 8.003080 7.997236 7.991721 7.994745

#> [65] 7.997494 8.004016 8.004658 7.990876 8.005706 7.988520 8.001780 7.999887

#> [73] 7.995353 8.001922 7.999556 7.991823 8.001197 8.002627 7.995131 7.994328

#> [81] 8.003638 7.998205 7.998748 7.998788 7.995536 7.995187 8.002954 7.990515

#> [89] 7.998332 7.999071 8.000322 8.009081 8.000420 7.991491 8.004528 7.995130

#> [97] 8.004004 8.003340 7.991975 8.002095 7.997072 7.999128 7.999161 8.000803

#> [105] 7.999983 8.005219 8.007856 7.995413 7.997072 7.991789 8.002836 7.994918

#> [113] 7.998364 7.998065 8.003765 7.999100 7.999092 8.005690 7.996150 7.997049

#> [121] 7.995422 8.001309 8.004653 7.999368 8.005464 7.998110 7.999314 8.000494

#> [129] 7.998950 8.001766 8.002499 8.003890 7.999351 8.010010 8.000136 7.998035

#> [137] 7.997959 7.998226 8.005156 7.996809 8.005947 7.998626 7.999683 8.002838

#> [145] 7.994185 8.007777 7.997660 7.998434 8.005570 7.999620 8.007739 8.001344

#> [153] 8.001876 7.999694 8.003923 8.003351 7.995889 7.996972 8.004136 7.994322

#> [161] 8.004582 8.004782 8.002548 8.001830 7.994419 7.990765 8.002389 8.000775

#> [169] 7.993585 7.999072 7.998273 7.995158 8.001326 8.000800 8.000028 8.001433

#> [177] 7.996632 7.998332 8.001263 7.995387 8.008242 7.998529 8.005821 7.995127

#> [185] 8.002382 8.003080 8.000058 8.002247 7.997168 8.000361 7.999333 7.999626

#> [193] 7.999869 7.996384 7.999879 8.004884 7.993368 7.996749 7.996700 7.995424

#> [201] 7.995013 8.002082 8.003597 8.005883 7.994742 8.002255 7.999620 7.998464

#> [209] 8.001769 7.997508 8.002905 8.007017 7.995149 8.003207 8.000890 7.999669

#> [217] 8.003337 7.994696 7.994707 8.004448 7.992009 7.999922 7.999756 8.004726

#> [225] 7.992690 7.995041 7.996850 8.002145 8.009995 7.999959 7.996165 8.003109

#> [233] 7.996013 8.003608 8.000127 8.003765 7.990617 7.997324 8.000803 7.993494

#> [241] 8.000370 8.003808 7.992575 7.996933 7.996994 8.000634 8.002016 7.998484

#> [249] 7.998385 8.000795 8.006065 8.001836 8.008047 8.001499 8.001697 8.002213

#> [257] 7.992823 8.003844 8.001221 8.000496 7.996187 7.996132 7.999327 7.999962

#> [265] 8.000931 8.003726 7.997111 8.003158 8.001485 8.000251 8.002080 7.997654

#> [273] 7.998111 7.993538 8.004725 7.995502 8.001124 7.992734 8.003572 8.002938

#> [281] 7.999594 7.998425 7.996461 7.996490 7.997992 8.003917 8.001102 8.000252

#> [289] 8.000327 7.999393 8.002145 7.994992 8.004531 8.006544 8.001795 8.005997

#> [297] 8.000187 7.998486 8.001398 7.999978 7.998351 7.999133 8.006761 8.001558

#> [305] 7.999189 8.001122 8.001045 8.007316 8.001788 7.999959 8.008194 8.000107

#> [313] 8.000926 7.999595 8.006518 8.007450 7.996197 8.000849 8.001812 8.004385

#> [321] 8.003489 7.999886 7.999740 8.003435 7.992848 7.997752 7.999748 8.003589

#> [329] 8.001024 8.000671 8.004918 8.000737 8.002496 8.003806 8.000524 8.004561

#> [337] 7.997760 7.996918 8.003566 7.996037 8.000180 7.998859 8.002022 7.996059

#> [345] 8.006707 7.999356 7.998631 8.007723 7.997093 8.002265 7.999603 8.001748

#> [353] 8.005883 8.001997 8.004857 8.003702 7.995881 7.991818 8.000019 7.997841

#> [361] 7.998118 8.002773 7.994097 7.998669 8.000589 8.002167 8.008173 7.994426

#> [369] 8.003815 7.993044 8.000878 7.995460 8.003273 7.999802 8.006627 8.003252

#> [377] 7.995597 7.999080 8.004179 8.003131 7.996516 8.002826 7.999095 7.997181

#> [385] 7.992385 8.004625 7.998466 7.999727 7.993886 8.002841 8.001732 7.996661

#> [393] 8.000603 8.001677 7.999998 7.997567 8.000222 8.000756 8.004544 8.000947

#> [401] 7.997040 8.005430 8.001218 8.001558 8.002232 8.000769 8.003476 7.999761

#> [409] 7.997106 7.996432 8.002529 8.002680 8.003653 8.004384 8.003580 8.006960

#> [417] 7.999115 7.999356 7.999725 8.005288 7.998386 7.994650 8.003615 8.001431

#> [425] 7.997461 8.001630 8.000794 8.006773 8.000724 7.994857 8.003622 7.998768

#> [433] 7.996143 8.002134 7.997128 7.996066 7.997266 7.995679 7.997561 7.994562

#> [441] 7.998796 7.999311 7.998239 7.998540 8.000455 8.008368 8.002420 8.000679

#> [449] 7.998102 7.998960 7.992243 8.006373 8.004770 7.993479 8.002840 7.993314

#> [457] 8.006509 8.005740 8.001913 8.004118 8.001852 7.999330 7.994910 7.995161

#> [465] 8.003803 7.999821 7.993035 7.990307 8.000245 8.003589 7.998373 8.000915

#> [473] 7.996105 7.991850 7.999565 8.000997 8.000153 7.995863 8.000065 7.998515

#> [481] 7.998846 8.001062 7.993166 8.002986 7.997530 7.995946 7.998264 8.001110

#> [489] 8.000858 7.995137 8.008483 8.000869 7.998562 7.996614 8.003904 8.005263

#> [497] 8.006753 7.997084 7.997529 7.989563 8.001522 7.998670 7.996021 7.995483

#> [505] 8.001770 8.001717 7.991533 7.999412 7.999645 7.994355 7.993114 8.000627

#> [513] 8.010076 8.003288 8.003777 8.005851 8.000043 8.001152 7.999197 7.998364

#> [521] 7.995635 7.998129 7.993719 8.000764 8.000355 8.000092 7.994164 7.998093

#> [529] 8.002111 8.005827 8.000869 8.002346 7.994580 7.995322 7.996652 8.006138

#> [537] 8.003977 8.003907 8.004049 7.994504 8.014377 7.998953 7.996947 8.000105

#> [545] 8.001883 8.004325 8.010698 8.003249 7.996150 7.989821 8.005344 7.999857

#> [553] 8.001999 7.992626 7.997846 7.997903 7.994397 8.003168 7.997489 8.004938

#> [561] 8.002456 7.999602 7.996637 7.998042 8.001956 7.995572 8.001843 8.000708

#> [569] 7.997919 8.003999 8.004178 7.995109 7.993574 7.994767 7.997390 8.001520

#> [577] 7.999361 8.004443 7.996834 8.004998 7.999541 7.999491 8.005824 8.004742

#> [585] 7.997602 7.999888 8.005370 8.001234 7.999335 7.996282 7.997528 7.997444

#> [593] 7.997842 7.996171 7.999733 7.994254 7.999398 7.997688 8.001622 8.004770

#> [601] 8.002612 8.002087 8.003470 8.000531 8.004210 8.005849 8.003935 8.007671

#> [609] 7.996234 8.001497 7.995899 7.996928 7.996431 8.006875 8.001129 7.997705

#> [617] 7.994693 7.999396 7.988884 8.003249 8.002490 7.999857 8.003456 8.001730

#> [625] 7.999365 7.995927 7.998436 7.997315 7.999018 8.004666 7.996638 8.000922

#> [633] 7.998162 7.993816 7.994366 8.001890 8.002941 7.992801 7.997216 8.000557

#> [641] 8.000729 8.000101 7.997768 7.997974 8.003744 7.998262 7.992696 8.005101

#> [649] 7.999609 7.993138 8.000426 7.994900 8.004284 8.012854 7.994210 7.999856

#> [657] 7.998415 7.997151 7.999853 7.998716 7.994087 8.002061 8.001650 8.005146

#> [665] 7.994835 7.997128 8.001002 7.999162 8.010244 7.987011 7.996244 8.006544

#> [673] 7.996552 7.998056 7.997690 8.002115 7.999330 8.000101 7.993337 8.001868

#> [681] 7.997708 7.998248 7.998024 7.996709 7.996197 8.007135 8.001489 8.001422

#> [689] 8.008039 8.009289 7.996225 8.000836 8.000082 7.999774 8.001081 7.992274

#> [697] 8.001135 7.997833 7.998273 7.996871 8.000761 8.000979 8.006200 8.001702

#> [705] 8.004011 8.006388 7.990568 8.000783 8.005143 8.002113 8.002188 7.997582

#> [713] 7.999763 7.997435 8.008409 7.996156 8.004533 8.004103 8.003101 8.002800

#> [721] 8.009371 8.000418 7.999501 8.001590 7.997991 7.999912 8.004775 7.995489

#> [729] 8.005278 8.008040 8.001640 8.002154 8.003519 8.000358 8.001789 8.006481

#> [737] 7.997452 7.999165 7.993300 7.993241 8.002614 8.004438 8.005179 7.999739

#> [745] 7.993115 8.003357 7.996687 7.997322 7.999771 8.001152 7.999695 8.003509

#> [753] 8.007651 7.994505 8.004972 8.012690 7.993007 7.995409 7.997158 8.005770

#> [761] 7.998445 7.993619 8.004286 8.003909 7.999051 7.998091 8.000428 7.995449

#> [769] 7.997594 7.995485 8.011446 7.999635 8.000065 7.997006 8.001500 7.998928

#> [777] 8.005524 7.997204 7.995080 7.995343 7.997477 7.994045 8.003951 8.004195

#> [785] 8.004118 8.004056 8.001690 8.002260 7.995364 8.003860 8.003883 8.007463

#> [793] 8.002588 7.997967 8.001126 7.996787 7.999212 8.005334 8.002778 8.002093

#> [801] 7.997217 8.001190 8.004823 8.000070 8.003892 8.000012 8.002786 7.993113

#> [809] 7.995466 7.999743 7.994070 7.996796 7.996422 8.000365 7.996788 7.997585

#> [817] 7.996056 7.989673 7.996982 7.993675 8.004065 8.006910 7.997645 7.998123

#> [825] 8.009883 7.998442 8.003505 8.002283 8.000163 8.004120 7.998061 7.999589

#> [833] 8.006205 7.999800 7.999730 8.009181 8.001889 7.995671 7.996214 7.997603

#> [841] 8.001443 7.996695 7.998633 7.999176 7.998028 8.003913 7.995438 8.003821

#> [849] 7.993965 8.005100 7.997139 8.004338 8.003756 7.990584 7.998772 7.998360

#> [857] 8.006856 8.006226 8.000738 8.003157 8.000312 8.008610 8.000772 7.998510

#> [865] 7.997484 7.999369 8.007896 8.000322 8.002530 8.005042 7.996720 7.993582

#> [873] 8.000999 7.999028 8.006570 7.994897 7.999858 8.001381 7.998004 8.004415

#> [881] 7.990003 7.997854 8.004259 8.001503 7.996231 7.998911 7.993561 7.998714

#> [889] 7.999465 8.006109 8.006244 7.999322 7.995647 8.004889 8.000151 7.993986

#> [897] 7.999818 7.991608 7.998606 7.998745 8.000750 7.995172 8.001522 8.005184

#> [905] 8.008769 7.994620 8.000849 8.001105 8.000597 7.995034 8.003281 7.999004

#> [913] 7.996932 7.999892 7.995818 8.011525 8.004843 7.999841 8.003156 7.998251

#> [921] 7.991937 8.009033 7.999846 7.996438 8.003225 7.998442 7.996140 7.996396

#> [929] 8.000022 8.005560 7.999502 7.993820 7.998693 7.995924 7.997602 8.003904

#> [937] 7.998516 7.991641 8.001246 8.002335 8.004942 7.997506 7.998937 7.995803

#> [945] 8.003265 8.005637 8.001930 7.998847 8.001632 8.003762 8.002825 7.988583

#> [953] 7.997631 7.994641 7.997859 7.998321 8.002305 7.995689 7.999937 8.005004

#> [961] 7.998045 8.001132 7.999001 7.996406 8.002577 8.005253 8.005391 7.997997

#> [969] 7.999156 8.010823 8.002178 8.001556 7.999361 7.995187 7.998069 7.998839

#> [977] 7.997726 8.001498 8.002995 8.006824 7.993380 7.998464 8.000051 7.992089

#> [985] 8.007963 7.998615 7.999981 7.999455 8.000510 7.998677 8.001354 7.997529

#> [993] 8.002342 8.001422 7.998963 8.003914 8.000152 7.997749 8.004205 8.004137

Distributions of \(\hat \beta_1\)
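The spread of the simulated \(\hat\beta_1\) values is the standard error of \(\hat\beta_1\). A useful check is that it roughly matches the "Std. Error" that `lm()` reports from a single sample. This sketch reuses the same hypothetical setup (Nature_Score ~ N(950, 20), n = 1000, \(\varepsilon \sim N(0, 3.24)\)); `simulate_fit()` is an illustrative helper.

```r
# Sketch: compare the spread of the simulated beta_1 hats with the standard
# error that lm() reports from a single sample.
# Hypothetical setup: Nature_Score ~ N(950, 20), n = 1000, epsilon ~ N(0, 3.24).
set.seed(4)

simulate_fit <- function(n = 1000) {
  nature <- rnorm(n, mean = 950, sd = 20)
  magical <- 3423 + 8 * nature + rnorm(n, mean = 0, sd = 3.24)
  lm(magical ~ nature)
}

# Sampling distribution of beta_1 hat from 1000 simulated samples
slopes <- replicate(1000, coef(simulate_fit())[["nature"]])

# Standard error estimated from one sample by lm()
one_fit <- simulate_fit()
se_lm <- summary(one_fit)$coefficients["nature", "Std. Error"]

c(sd_of_slopes = sd(slopes), se_from_lm = se_lm)
quantile(slopes, c(0.025, 0.975))  # middle 95% of the simulated slopes
```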

Central Limit Theorem


The Central Limit Theorem (CLT) is a fundamental concept in probability and statistics. It states that the distribution of the sum (or average) of a large number of independent, identically distributed (i.i.d.) random variables with finite variance will be approximately normal, regardless of the underlying distribution of those individual variables.

Formal Statement of the CLT

- Let \(X_1\), \(X_2\), …, \(X_n\) be a sequence of i.i.d. random variables with mean \(\mu\) and standard deviation \(\sigma\).

- Let \(\bar X\) be the sample mean of these variables.

- As the sample size \(n\) grows large, the distribution of \(\bar X\) becomes approximately normal with:

- Mean: \(\mu\)

- Standard Deviation: \(\sigma/\sqrt{n}\)
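The statement can be checked numerically with a clearly non-normal population. This sketch assumes an Exponential(rate = 1) population, for which \(\mu = 1\) and \(\sigma = 1\), and a sample size of 50; both choices are illustrative.

```r
# Sketch of the CLT with a skewed (non-normal) population:
# X ~ Exponential(rate = 1), so mu = 1 and sigma = 1 (an assumed example).
set.seed(5)
n <- 50

# 10,000 sample means, each from a sample of size n
xbar <- replicate(10000, mean(rexp(n, rate = 1)))

mean(xbar)  # approximately mu = 1
sd(xbar)    # approximately sigma / sqrt(n) = 1 / sqrt(50)

# Share of sample means within 1.96 standard errors of mu;
# close to 0.95 if the normal approximation is good
mean(abs(xbar - 1) < 1.96 / sqrt(n))
```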

CLT Example

- Imagine: You’re flipping a fair coin many times.

- Each flip is an independent event (heads or tails).

- The probability of heads/tails is the same for each flip.

- Now: Calculate the proportion of heads in each set of 10 flips, then in each set of 100 flips, and so on.

- Observation: As the number of flips in each set increases, the distribution of these averages will start to resemble a bell-shaped curve (normal distribution), even though the individual coin flips are not normally distributed.
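The coin-flip example can be sketched with `rbinom()`, which counts the heads in each set. Each flip has \(\sigma = 0.5\), so the CLT predicts a standard deviation of \(0.5/\sqrt{k}\) for the proportion of heads in a set of \(k\) flips. The number of sets (5000) and `prop_heads()` are illustrative choices.

```r
# Sketch of the coin-flip example: proportion of heads in sets of 10, 100, 1000 flips.
set.seed(6)

prop_heads <- function(flips_per_set, n_sets = 5000) {
  # rbinom() counts heads in each set; dividing gives the proportion
  rbinom(n_sets, size = flips_per_set, prob = 0.5) / flips_per_set
}

for (k in c(10L, 100L, 1000L)) {
  p <- prop_heads(k)
  cat(sprintf("%4d flips: mean = %.3f, sd = %.4f (theory %.4f)\n",
              k, mean(p), sd(p), 0.5 / sqrt(k)))
}
```

Plotting `hist(prop_heads(100))` shows the bell shape emerging.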

CLT Implications

- Approximation: Even if the underlying data is not normally distributed, the distribution of the sample means will be approximately normal for large enough sample sizes.

- Practical Rule: A common rule of thumb is that the sample size (n) should be at least 30 for the CLT to provide a good approximation. However, this is a guideline, and the actual required sample size can vary depending on the shape of the original distribution.

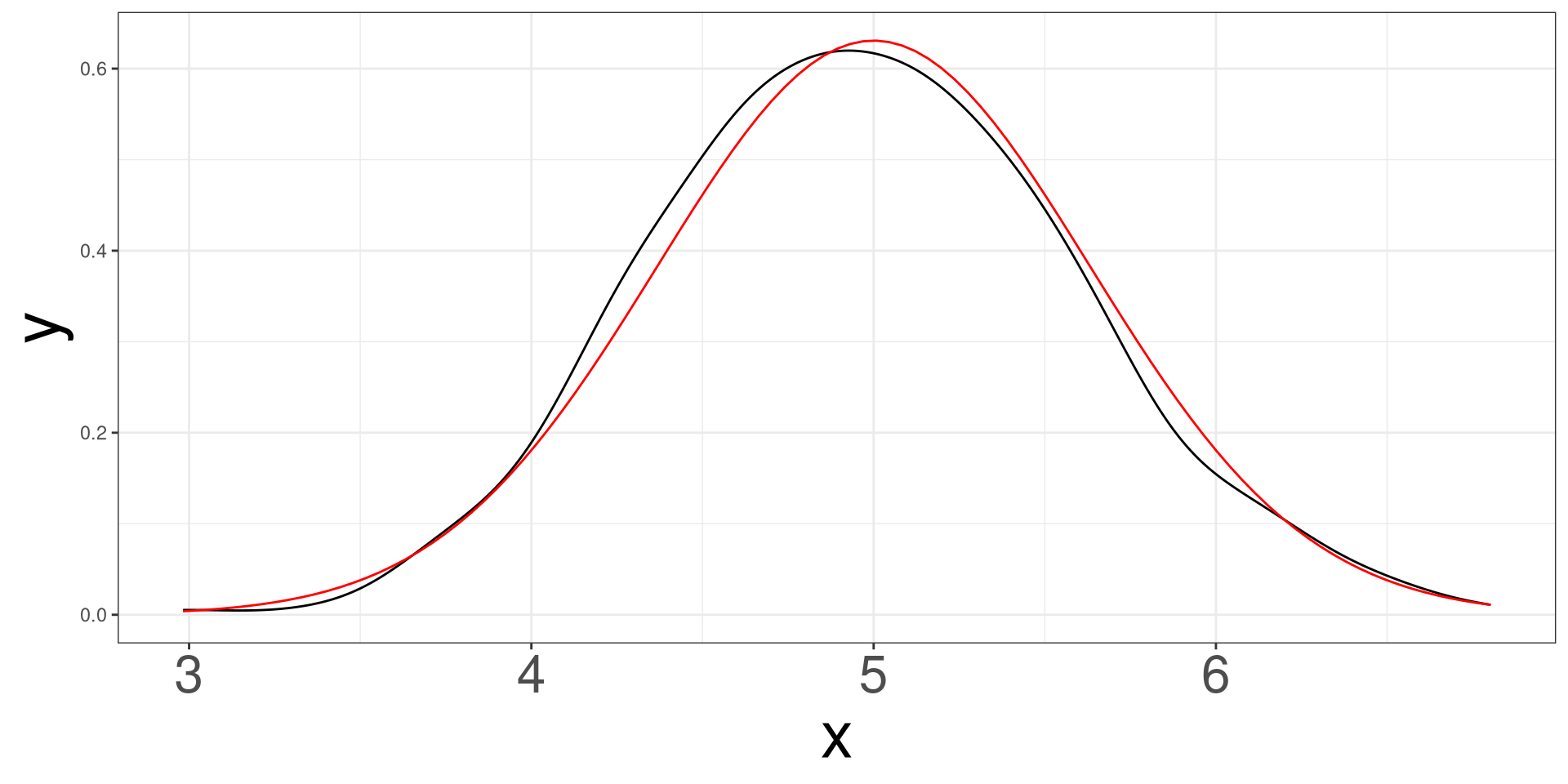

Normal Example \(n = 10\)

Simulating 500 samples of size 10 from a normal distribution with mean 5 and standard deviation of 2.

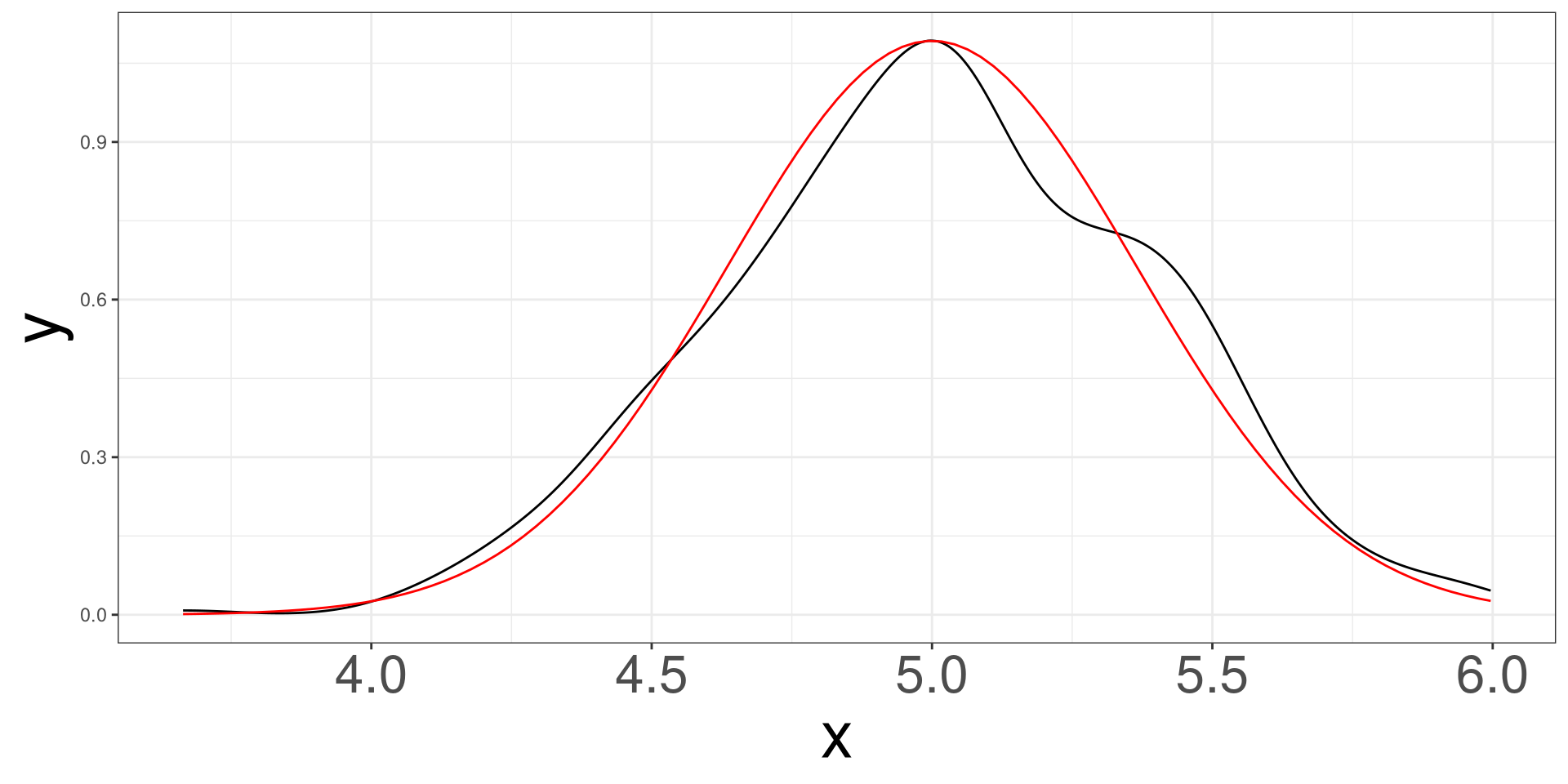

Normal Example \(n = 30\)

Simulating 500 samples of size 30 from a normal distribution with mean 5 and standard deviation of 2.

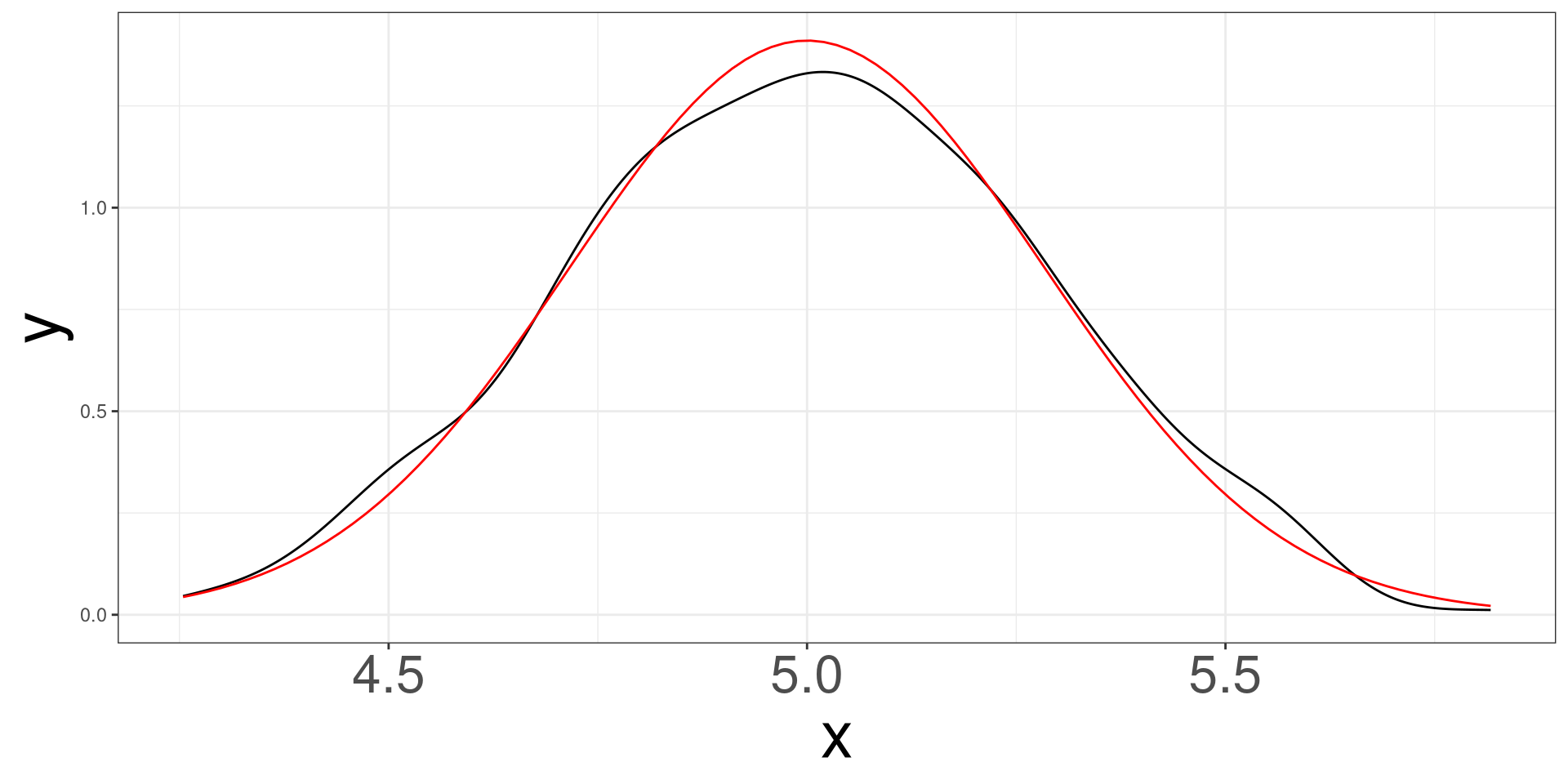

Normal Example \(n = 50\)

Simulating 500 samples of size 50 from a normal distribution with mean 5 and standard deviation of 2.

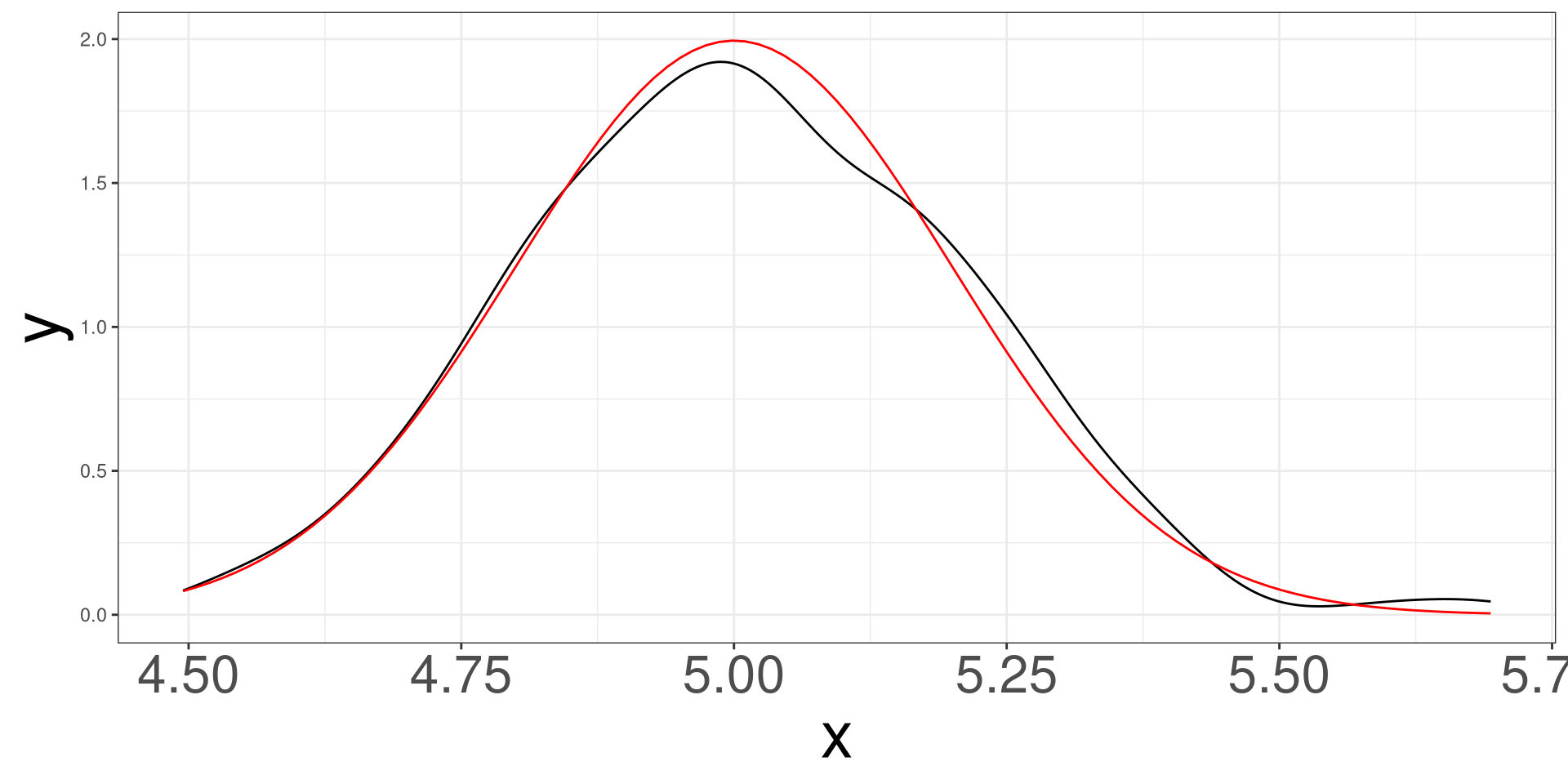

Normal Example \(n = 100\)

Simulating 500 samples of size 100 from a normal distribution with mean 5 and standard deviation of 2.
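The four normal examples can be reproduced in one loop. For each sample size, the sketch draws 500 samples from N(5, 2), computes their means, and compares the spread of those means with the theoretical \(2/\sqrt{n}\).

```r
# Sketch of the normal examples: 500 samples of size n from N(mean = 5, sd = 2).
set.seed(7)
sizes <- c(10, 30, 50, 100)

results <- sapply(sizes, function(n) {
  xbar <- replicate(500, mean(rnorm(n, mean = 5, sd = 2)))
  c(n = n, mean_xbar = mean(xbar), sd_xbar = sd(xbar), theory_se = 2 / sqrt(n))
})

t(results)  # one row per sample size; sd_xbar shrinks like 2 / sqrt(n)
```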

Common Sampling Distributions


Normal DGP

When the data come from a normal distribution (a normal DGP), the sample mean and sample variance have exact, known sampling distributions for any sample size, not only for large samples.

Statistics

Mean \[ \bar X = \frac{1}{n}\sum ^n_{i=1} X_i \]

Variance \[ s^2 = \frac{1}{n-1}\sum ^n_{i=1} (X_i - \bar X)^2 \]

Standard Deviation \[ s = \sqrt{s^2} \]
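The formulas can be checked against R's built-in `mean()`, `var()`, and `sd()`, which also divide by \(n - 1\). The five data values are made up for illustration.

```r
# Sketch: the sample mean and sample variance computed from their formulas,
# checked against R's built-in mean(), var(), and sd().
x <- c(4.1, 5.3, 6.8, 5.0, 3.9)   # made-up example values
n <- length(x)

xbar <- sum(x) / n
s2 <- sum((x - xbar)^2) / (n - 1)
s <- sqrt(s2)

c(xbar = xbar, s2 = s2, s = s)
all.equal(c(xbar, s2, s), c(mean(x), var(x), sd(x)))
```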

When the true \(\mu\) and \(\sigma\) are known

A data sample of size \(n\) is generated from: \[ X_i \sim N(\mu, \sigma) \]

Distribution of \(\bar X\)

\[ \bar X \sim N(\mu, \sigma/\sqrt{n}) \]

Distribution of Z

\[ Z = \frac{\bar X - \mu}{\sigma/\sqrt{n}} \sim N(0,1) \]
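A quick simulation shows that the standardized mean behaves like a standard normal. The values \(\mu = 5\), \(\sigma = 2\), and \(n = 10\) are assumptions chosen to match the earlier normal examples.

```r
# Sketch: when mu and sigma are known, Z = (xbar - mu) / (sigma / sqrt(n)) ~ N(0, 1).
# Assumed values: mu = 5, sigma = 2, n = 10.
set.seed(8)
mu <- 5; sigma <- 2; n <- 10

z <- replicate(10000, {
  x <- rnorm(n, mean = mu, sd = sigma)
  (mean(x) - mu) / (sigma / sqrt(n))
})

c(mean = mean(z), sd = sd(z))   # near 0 and 1
mean(abs(z) > qnorm(0.975))     # near 0.05
```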

When the true \(\mu\) and \(\sigma\) are unknown

A data sample of size \(n\) is generated from: \[ X_i \sim N(\mu, \sigma) \]

Distribution of \(s^2\) (unknown \(\mu\))

\[ (n-1)s^2/\sigma^2 \sim \chi^2(n-1) \]
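The chi-square result can be checked by simulation: a \(\chi^2(k)\) variable has mean \(k\) and variance \(2k\). The sketch assumes \(\mu = 5\), \(\sigma = 2\), and \(n = 10\), so \(k = 9\).

```r
# Sketch: (n - 1) s^2 / sigma^2 follows a chi-square with n - 1 degrees of freedom.
# Assumed values: mu = 5, sigma = 2, n = 10.
set.seed(9)
mu <- 5; sigma <- 2; n <- 10

stat <- replicate(10000, (n - 1) * var(rnorm(n, mean = mu, sd = sigma)) / sigma^2)

c(mean = mean(stat), var = var(stat))   # chi-square(9): mean 9, variance 18
mean(stat > qchisq(0.95, df = n - 1))   # near 0.05
```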

Distribution of T (unknown \(\sigma\))

Replacing \(\sigma\) with \(s\) in Z gives:

\[ T = \frac{\bar X - \mu}{s/\sqrt{n}} \sim t(n-1) \]
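Replacing \(\sigma\) with \(s\) adds extra variability, so the statistic has heavier tails than N(0, 1). This sketch (same assumed \(\mu = 5\), \(\sigma = 2\), \(n = 10\)) compares how often it exceeds the normal cutoff versus the \(t(n-1)\) cutoff.

```r
# Sketch: replacing sigma with s gives T = (xbar - mu) / (s / sqrt(n)) ~ t(n - 1).
# Assumed values: mu = 5, sigma = 2, n = 10.
set.seed(10)
mu <- 5; sigma <- 2; n <- 10

t_stat <- replicate(10000, {
  x <- rnorm(n, mean = mu, sd = sigma)
  (mean(x) - mu) / (sd(x) / sqrt(n))
})

# Tail areas beyond the normal and t cutoffs
mean(abs(t_stat) > qnorm(0.975))           # noticeably above 0.05
mean(abs(t_stat) > qt(0.975, df = n - 1))  # near 0.05
```

With small samples the normal cutoff rejects too often, which is why the t distribution is used when \(\sigma\) is estimated.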

Sampling Distributions for Regression Models


Regression Coefficients

The estimates of regression coefficients (slopes) have a distribution!

Depending on the type of outcome, we work with one of two distributions: the t distribution (linear regression) or the standard normal distribution (logistic regression).

Linear Regression

\[ \frac{\hat\beta_j-\beta_j}{\mathrm{se}(\hat\beta_j)} \sim t_{n-p^\prime} \]

Here \(p^\prime\) is the number of estimated coefficients, including the intercept.

When \(\beta_j = 0\):

\[ \frac{\hat\beta_j}{\mathrm{se}(\hat\beta_j)} \sim t_{n-p^\prime} \]
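The t statistic and p-value that `summary()` reports for an `lm()` fit can be reproduced by hand from the estimate and its standard error. The simulated data (true slope 0.5, \(n = 40\)) are an assumption for illustration; with one predictor, \(p^\prime = 2\).

```r
# Sketch: the t statistic for H0: beta_1 = 0 is estimate / standard error,
# compared with a t distribution on n - p' degrees of freedom (p' = 2 here).
# Simulated data with an assumed true slope of 0.5.
set.seed(11)
n <- 40
x <- rnorm(n)
y <- 1 + 0.5 * x + rnorm(n)

fit <- lm(y ~ x)
est <- coef(summary(fit))["x", "Estimate"]
se  <- coef(summary(fit))["x", "Std. Error"]

t_value <- est / se
p_value <- 2 * pt(abs(t_value), df = n - 2, lower.tail = FALSE)

c(t = t_value, p = p_value)
coef(summary(fit))["x", c("t value", "Pr(>|t|)")]  # should match
```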

Logistic Regression

\[ \frac{\hat\beta_j - \beta_j}{\mathrm{se}(\hat\beta_j)} \sim N(0,1) \]

This normal distribution is a large-sample approximation.

When \(\beta_j = 0\):

\[ \frac{\hat\beta_j}{\mathrm{se}(\hat\beta_j)} \sim N(0,1) \]
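For a logistic `glm()` fit, `summary()` reports a z value (estimate divided by standard error) and a p-value from N(0, 1). The sketch reproduces both by hand; the simulated data (true coefficients -0.5 and 1, \(n = 200\)) are assumptions for illustration.

```r
# Sketch: for logistic regression, glm() reports a Wald z statistic,
# estimate / standard error, compared with N(0, 1) (a large-sample approximation).
# Simulated data with assumed true coefficients -0.5 and 1.
set.seed(12)
n <- 200
x <- rnorm(n)
y <- rbinom(n, size = 1, prob = plogis(-0.5 + 1 * x))

fit <- glm(y ~ x, family = binomial)
est <- coef(summary(fit))["x", "Estimate"]
se  <- coef(summary(fit))["x", "Std. Error"]

z_value <- est / se
p_value <- 2 * pnorm(abs(z_value), lower.tail = FALSE)

c(z = z_value, p = p_value)
coef(summary(fit))["x", c("z value", "Pr(>|z|)")]  # should match
```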

Scientific Notation


We often work with very large or very small numbers.

- Earth → Sun distance: about 150,000,000 km

- Diameter of a hydrogen atom: about 0.0000000001 m

Problems with standard form:

- Hard to read

- Easy to copy wrong

- Difficult to compare

Scientific notation makes numbers compact and standardized.

The Scientific Notation Form

A number is in scientific notation if:

\[ a \times 10^n \]

where:

- \(a\) is at least 1 and less than 10

- \(n\) is an integer (positive, negative, or zero)

- \(10^n\) is a power of ten

Example: Large Number

Write 45,000 in scientific notation.

Move decimal:

\[ 45000 \rightarrow 4.5 \]

Moved 4 places left:

\[ 4.5 \times 10^4 \]

Example: Small Number

Write 0.00072 in scientific notation.

Move decimal:

\[ 0.00072 \rightarrow 7.2 \]

Moved 4 places right:

\[ 7.2 \times 10^{-4} \]
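Both examples can be checked in R, where `format(..., scientific = TRUE)` writes a number in e notation (\(a \times 10^n\) shown as `ae+n` or `ae-n`).

```r
# Sketch: R's format() can show a number in scientific (e) notation.
format(45000, scientific = TRUE)    # "4.5e+04", i.e. 4.5 x 10^4
format(0.00072, scientific = TRUE)  # "7.2e-04", i.e. 7.2 x 10^-4
```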

Understanding Positive Exponent

Positive exponents → big numbers

- \(10^3 = 1{,}000\)

- \(10^6 = 1{,}000{,}000\)

Example:

\[ 2.1 \times 10^6 = 2{,}100{,}000 \]

Understanding Negative Exponent

Negative exponents → small numbers

- \(10^{-2} = 0.01\)

- \(10^{-5} = 0.00001\)

Example:

\[ 4.3 \times 10^{-3} = 0.0043 \]

Converting Back to Standard Form

Rule:

- \(10^{+n}\): move decimal right \(n\) places

- \(10^{-n}\): move decimal left \(n\) places

Convert Example (Positive Exponent)

\[ 6.2 \times 10^5 \]

Move decimal 5 places right:

\[ 620{,}000 \]

Convert Example (Negative Exponent)

\[ 9.1 \times 10^{-4} \]

Move decimal 4 places left:

\[ 0.00091 \]
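The conversions back to standard form can be checked in R with `format(..., scientific = FALSE)`, and numbers written in e notation can be compared directly.

```r
# Sketch: format(..., scientific = FALSE) writes the number in standard form.
format(6.2e5, scientific = FALSE)   # "620000"
format(9.1e-4, scientific = FALSE)  # "0.00091"

# Numbers in e notation compare like any other numbers
3.2e5 > 7.1e4                       # TRUE
```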

Comparing Numbers in Scientific Notation

Step 1: Compare exponents

- Bigger exponent → bigger number (for positive numbers)

Step 2: If exponents match, compare coefficients \(a\)

Example:

- \(3.2 \times 10^5\)

- \(7.1 \times 10^4\)

Since \(10^5 > 10^4\), the first number is larger.

Scientific Notation in R

R often displays very large/small numbers using e notation.

\[ a \times 10^n \quad \text{is shown as} \quad a\text{e}n \]

Examples:

- 3e+06 means \(3 \times 10^6\)
- 4.5e-04 means \(4.5 \times 10^{-4}\)
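The sketch below shows R printing a large number in e notation, reading e notation back as an ordinary number, and using the `scipen` option to discourage scientific display; the option is reset to its default at the end.

```r
# Sketch: R prints large numbers in e notation and reads it back as ordinary numbers.
print(3000000)            # [1] 3e+06
as.numeric("4.5e-04")     # the same value as 4.5 * 10^-4
3e+06 == 3 * 10^6         # TRUE

options(scipen = 100)     # penalize scientific notation when printing
print(3000000)            # [1] 3000000
options(scipen = 0)       # restore the default
```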

m201.inqs.info/lectures/9